The conjugate gradient method, a numerical method for solving systems of linear algebraic equations , is an iterative method of the Krylov type .

Content

Statement of the Problem

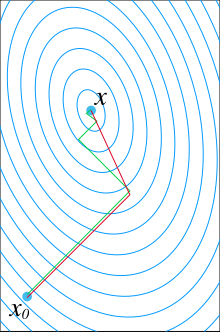

Let a system of linear equations be given: . Moreover, the matrix of the system is a real matrix with the following properties: , that is, it is a symmetric positive definite matrix . Then the process of solving SLAE can be represented as minimizing the following functionality:

Behind the scalar product is indicated. By minimizing this functional, using the Krylov subspaces , we obtain the conjugate gradient method algorithm.

Algorithm

- Preparing for the iterative process

- We choose the initial approximation

- th iteration of the method [1]

- Stop criterion

Since the minimized functional is quadratic, the process should give an answer to -th iteration, however, when implementing the method on a computer, there is an error in representing real numbers, as a result of which more iterations may be required. The accuracy can also be estimated by the relative discrepancy , and stop the iterative process when the residual becomes less than a given number.

Algorithm for a preconditioned system

Let the preconditioned system have the form: , then the algorithm of the method for such a system can be written as follows:

- Preparing for the iterative process

- We choose the initial approximation

- method iteration

- After the iterative process

- where - an approximate solution to the system, Is the total number of iterations of the method.

- Stop criterion

In this case, you can use the same stopping criteria as for a conventional system, only taking into account preconditioning. For example, the relative discrepancy will be calculated as: , however, you can use the residual of the original system, which is calculated as follows:

Features and Generalizations

Like all methods on Krylov subspaces, the conjugate gradient method only requires the matrix to be able to multiply it by a vector, which makes it possible to use special matrix storage formats (such as sparse) and save memory on matrix storage.

The method is often used to solve finite element SLAEs.

The method has generalizations: the bis conjugate gradient method for working with asymmetric matrices. And the complex conjugate gradient method, where the matrix may contain complex numbers, but must satisfy the condition: , that is, to be a self-adjoint positive definite matrix.

Notes

- ↑ Henk A. van der Vorst. Iterative Krylov Methods for Large Linear System. - Cambridge University Press, 2003 .-- 221 p. - ISBN 9780521818285 .